Taking Leaves Offline without Cluster Downtime (MemSQL Ops)

Please follow this guide to learn how to migrate to SingleStore tools.

This topic does not apply to SingleStore Managed Service.

Occasionally hosts need to be taken offline for maintenance (upgrading memory, etc). This can present a challenge if these hosts are home to one or more leaf nodes.

By following the steps below, you can detach leaf nodes from a cluster, take the host offline for maintenance, and attach the leaves back to the cluster following maintenance. This can all be done without downtime to the cluster.

Assumptions:

-

The steps below assume the host IP addresses will not change during maintenance.

-

The steps below assume the cluster is configured for High Availability (redundancy 2). If both leaves in a paired group of leaves are detached from the cluster, the cluster will become unavailable and downtime will be experienced. For this reason only one availability group of leaves should be detached at a time.

Step 1: Enable manual cluster control of the cluster

Manual cluster control is enabled by running the following command:

memsql-ops cluster-manual-control --enable

For more information on this command, see the reference.

The cluster is now under manual control. We will not automatically restart stopped nodes, update license files, etc.

Enabling manual cluster control will prevent the leaf nodes we remove from automatically being re-added to the cluster.

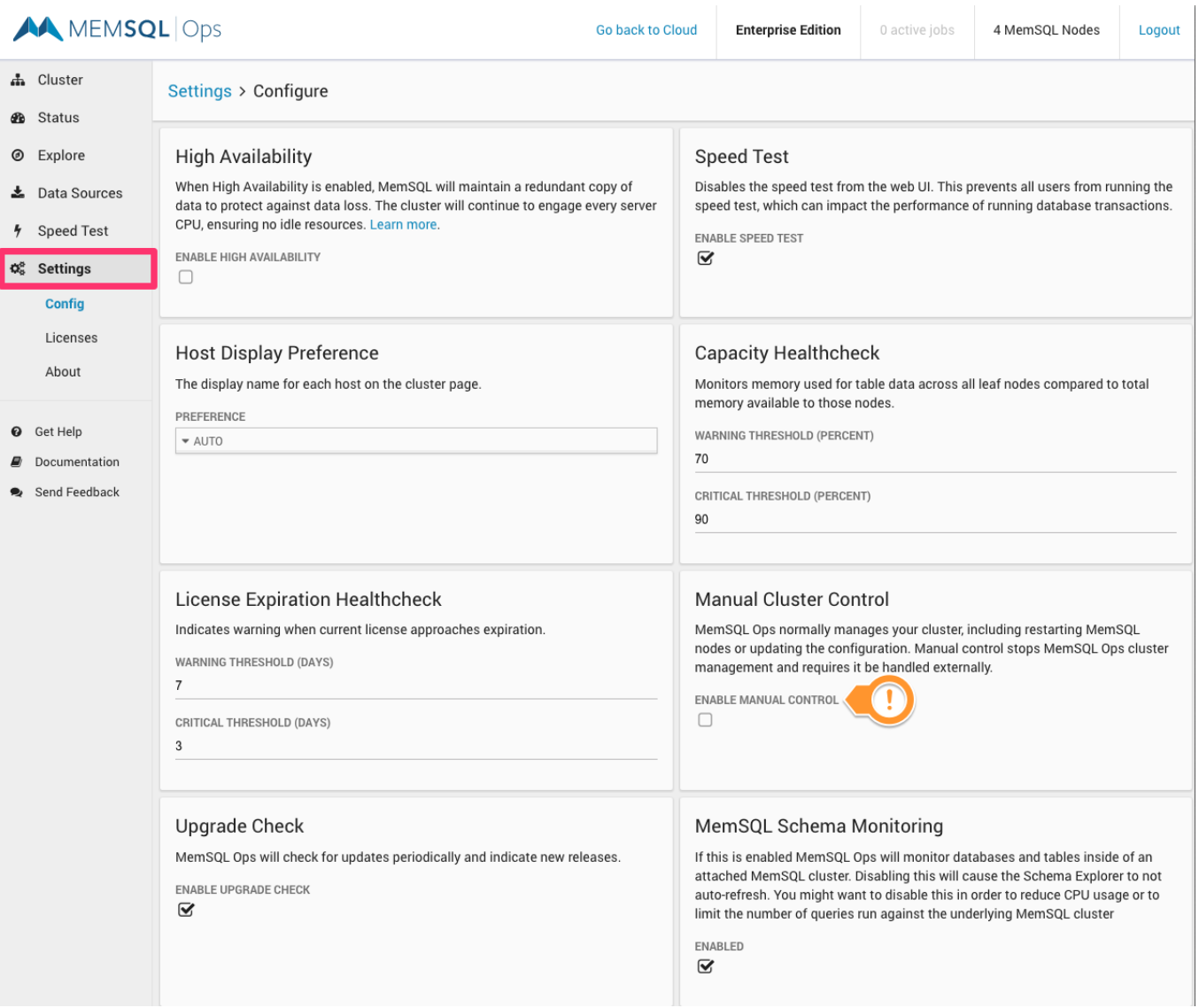

You can check the current status (enabled or disabled) of manual cluster control in the MemSQL Ops Dashboard:

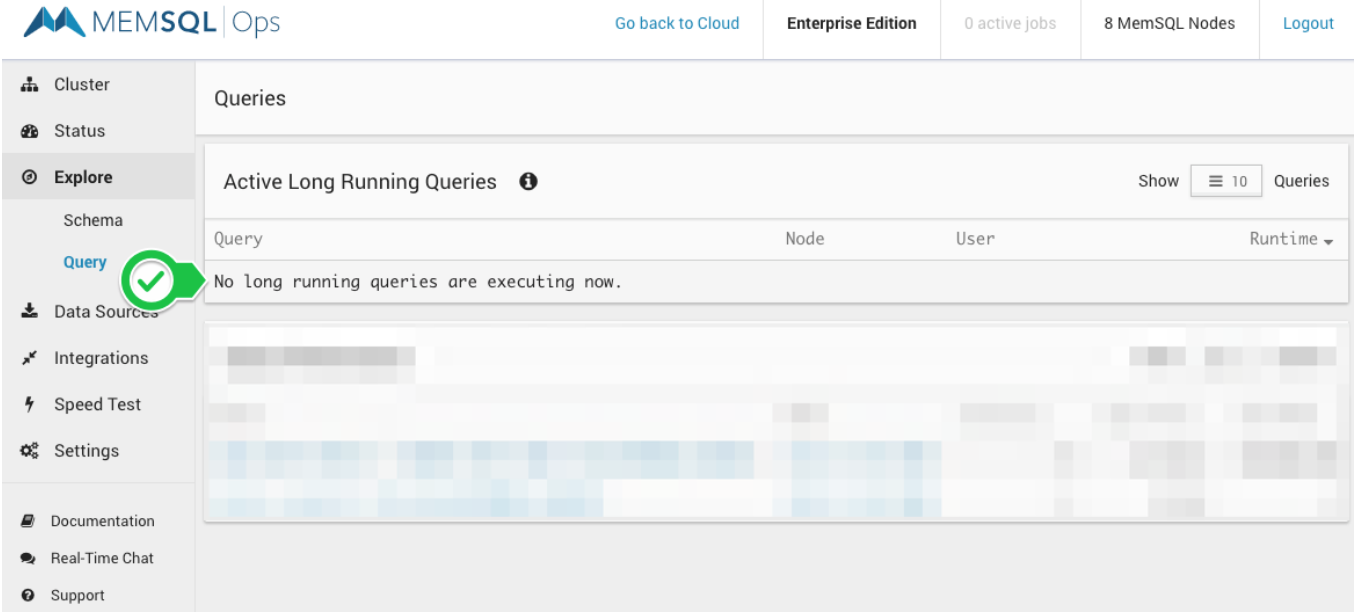

Step 2: Check long running queries section of Ops web UI

Before removing leaves make sure there are no long running queries present in the cluster:

Step 3: Ensure all database partitions are balanced

Read the Understanding Orphaned Partitions topic to verify if any orphaned partitions exist in the cluster. If there are, this topic explains how to resolve them.

Step 4. Confirm the leaf node you want to take offline has an online paired leaf on a different host

To confirm this, run SHOW LEAVES and check the results. Suppose you have a leaf node running on 192.168.1.110 and you want to take it offline. To confirm it has an online paired leaf, run SHOW LEAVES and observe that this node’s paired host is 192.168.1.112 and the paired host is online:

+----------------+------+--------------------+---------------+------------+--------+--------------------+------------------------------+--------+

| Host | Port | Availability_Group | Pair_Host | Pair_Port | State | Opened_Connections | Average_Roundtrip_Latency_ms | NodeId |

+------------+------+--------------------+------------+-----------+--------+--------------------+------------------------------+-------+--------+

| 192.168.1.110 | 3307 | 1 | 192.168.1.112 | 3307 | online | 2 | 0.168 | 6 |

| 192.168.1.112 | 3307 | 2 | 192.168.1.110 | 3307 | online | 2 | 0.255 | 8 |

+------------+------+--------------------+------------+-----------+--------+--------------------+------------------------------+-------+--------+

2 rows in set (0.00 sec)

Step 5: Detach the leaf node(s) from the host to be taken offline for maintenance

A leaf node is detached from a cluster by using the following syntax:

DETACH LEAF'host':port;

For more information on this command see the reference.

Note: If both leaves in a paired group of leaves are detached from the cluster will become unavailable and downtime will be experienced. For this reason only one availability group of leaves should be detached at a time.

Step 6: Detach the aggregator(s) from the host to be taken offline for maintenance

Use the following syntax to detach an aggregator from a host:

REMOVE AGGREGATOR 'host':port;

For more information on this command see the reference.

Step 7: Stop the SingleStore node(s)

Stop the SingleStore node(s) (leaves and aggregators) residing on all hosts that will be taken offline for maintenance. Stop the SingleStore nodes using sql-ops memsql-stop.

For more information on this command see the reference.

Step 8: Stop the Ops follower agent

Stop the Ops follower agent residing on all hosts that will be taken offline for maintenance. You can shut down a follower agent using sql-ops agent-stop OR by SSHing directly to the host and running sql-ops stop.

For more information on this command see the reference.

Step 9: Take the host offline, perform maintenance, bring host back online and confirm SingleStore DB is running

It is now safe to power down the host and perform maintenance. After performing maintenance bring the host back online.

Step 10: Start the Ops follower agent

Start the Ops follower agent residing on all hosts that were previously taken offline for maintenance and are now back online. You can start a follower agent using sql-ops agent-start OR by SSHing directly to the host and running sql-ops start.

Step 11: Start the SingleStore node(s)

Start the SingleStore node(s) (leaves and aggregators) residing on all hosts that were previously taken offline for maintenance and are now back online. Start the SingleStore node(s) using sql-ops memsql-start.

Step 12: Attach the leaf node(s) back to the host that was taken offline for maintenance

Once maintenance is completed, the host is back online and SingleStore DB is running attach the leaf node(s) back to the cluster.

A leaf node is attached to a cluster by using the following command from the master aggregator node:

ATTACH LEAF 'host':port NO REBALANCE;

For more information on this command, see the reference.

Note: If you took multiple leaf nodes offline and are attaching them back to the cluster you can use the reference to attach all detached leaves with one command:

Step 13: Attach the aggregator(s) back to the host that was taken offline for maintenance

Use the following syntax to attach an aggregator from a host:

ADD AGGREGATOR user:'password'@'host':port;

For more information on this command see the reference.

Step 14: Disable manual control of the cluster

Manual cluster control is disabled by running the following command:

memsql-ops cluster-manual-control --disable

For more information on this command, see the reference. The cluster is no longer under manual control. We will now automatically restart stopped nodes, update license files, etc.

Step 15: Run REBALANCE PARTITIONS

After attaching the leaf node(s) to the host run the following command on your master aggregator node for each of your databases:

REBALANCE PARTITIONS ON <db_name_here>;

For more information on this command, see the reference.

Running rebalance partitions will redistribute data across your cluster. In doing so a portion of data in your cluster will be relocated to the SingleStore nodes that were attached in step 12.