MemSQL Streamliner will be deprecated in MemSQL 6.0. For current Streamliner users, we recommend migrating to MemSQL Pipelines instead. MemSQL Pipelines provides increased stability, improved ingest performance, and exactly-once semantics. For more information about Streamliner deprecation, see the 5.8 Release Notes. For more information about Pipelines, see the MemSQL Pipelines documentation.

This section describes common operations to administer a MemSQL Streamliner cluster, including deploying and upgrading a Spark cluster, uploading a custom Spark Interface JAR, and connecting to a remote Spark cluster.

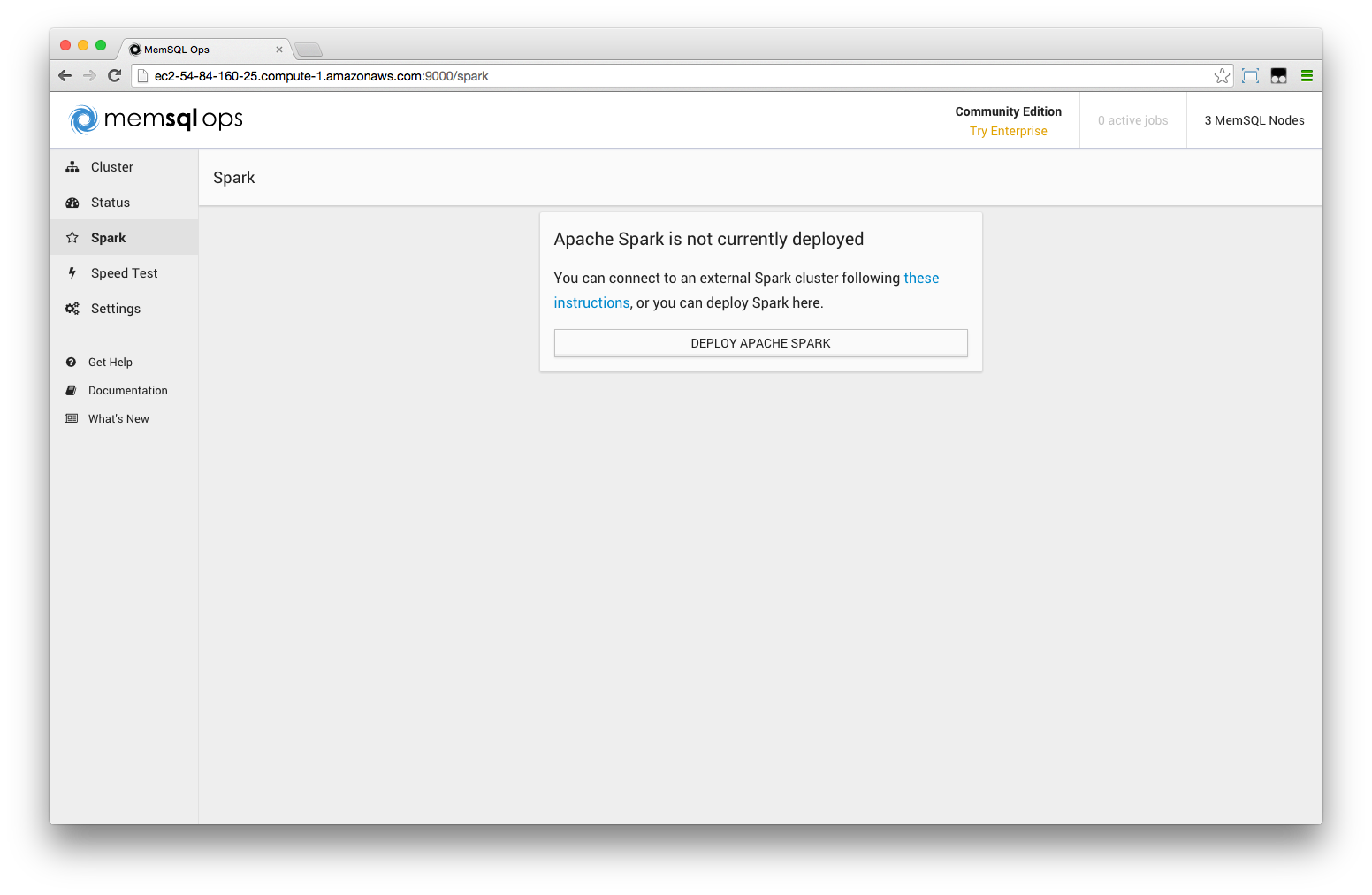

Deploying a Spark Cluster

MemSQL Ops deploys and runs Spark by binding its services starting from port 10001. For Spark to work properly, **we recommend opening ports 10001-10020 within the cluster.**

Optionally, you may want the following services open for public HTTP access:

- MemSQL Streamliner Interface web UI, port 10010 on primary agent

- Spark master web UI, port 10002 on primary agent

- Spark workers web UI, port 10004 on primary agent, 10002 on all other agents

Standard Spark ports are mapped as follows:

- Spark 7077 -> spark://<master_ip>:10001

- Spark 8080 -> http://<master_ip>:10002
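As a small illustration of this mapping, the sketch below prints the Spark master URL and the master web UI URL for a cluster deployed by MemSQL Ops. The function name ops_spark_urls is hypothetical and is not part of MemSQL Ops; the port numbers are the ones listed on this page.

```shell
# Hypothetical helper: print the Spark master URL and the master web UI URL
# for a Spark cluster deployed by MemSQL Ops (ports 10001 and 10002).
ops_spark_urls() {
  local master_ip
  master_ip="$1"
  if [ -z "$master_ip" ]; then
    echo "usage: ops_spark_urls <master_ip>" >&2
    return 1
  fi
  echo "spark://${master_ip}:10001"
  echo "http://${master_ip}:10002"
}
```

For example, ops_spark_urls 10.0.2.68 prints spark://10.0.2.68:10001 followed by http://10.0.2.68:10002.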

To deploy a Spark cluster via MemSQL Ops, go to the Spark page and click the deploy button.

Or via MemSQL Ops CLI:

$ memsql-ops spark-deploy [--master-agent-id <agent_id>]

By default, the Spark Master is installed on the MemSQL Ops Primary Agent.

You can specify a different agent via the --master-agent-id option.

If your hosts do not have internet access, download the latest MemSQL-supported Apache Spark distribution separately (the download link is here), then run the memsql-ops file-add /path/to/memsql-spark.tar.gz command, followed by the memsql-ops spark-deploy command.

Upgrading a Spark Cluster

When a new version of MemSQL Streamliner is available, the web UI will prompt for upgrade.

Currently, upgrading a Spark cluster via MemSQL Ops temporarily stops all running pipelines; stops, upgrades, and restarts all Spark components; and finally restarts the pipelines that were running.

You can also upgrade a Spark cluster via MemSQL Ops CLI:

$ memsql-ops spark-upgrade [--version <version>]

Versions are in the form <spark_version>-distribution-<streamliner_version>. For instance, 1.4.1-distribution-0.1.2 refers to the integrated release of Spark v1.4.1 and MemSQL Streamliner v0.1.2.
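If a script needs the two parts of a version string separately, a minimal sketch using shell parameter expansion could look like this. The helper name split_distribution_version is hypothetical; it assumes only the format described above.

```shell
# Split a version string of the form
# <spark_version>-distribution-<streamliner_version> into its two parts.
# split_distribution_version is a hypothetical helper, not a MemSQL Ops command.
split_distribution_version() {
  local version spark_version streamliner_version
  version="$1"
  case "$version" in
    *-distribution-*)
      # Everything before the first "-distribution-" is the Spark version.
      spark_version="${version%%-distribution-*}"
      # Everything after it is the Streamliner version.
      streamliner_version="${version#*-distribution-}"
      echo "spark=${spark_version} streamliner=${streamliner_version}"
      ;;
    *)
      echo "unrecognized version: ${version}" >&2
      return 1
      ;;
  esac
}
```

Calling split_distribution_version 1.4.1-distribution-0.1.2 prints spark=1.4.1 streamliner=0.1.2.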

Connecting to a Remote Spark Cluster

MemSQL Ops can connect to a remote Spark cluster, so you can take advantage of MemSQL Streamliner even with an existing Spark cluster.

In this section we assume that you have a MemSQL cluster deployed via MemSQL Ops, and a remote Spark cluster manually managed, i.e. not deployed via MemSQL Ops. We’ll describe how to obtain the MemSQL Spark Interface, run it as a job in the remote Spark cluster, and finally connect MemSQL Ops to the Spark Interface to manage pipelines.

The Spark cluster needs to have port 10009 open (for MemSQL Ops to reach Spark Interface), and the MemSQL cluster needs to have port 3306 open (for the Spark Interface to reach the Master Aggregator and Spark workers to load data into MemSQL).

1. Obtain the Spark Interface

Download the latest version of the MemSQL Spark Interface. You can find the exact version on this page: http://versions.memsql.com/memsql-spark-interface/latest.

The MemSQL Spark Interface is also open source, so you can get the code and build it yourself from:

https://github.com/memsql/memsql-spark-connector

2. Run Spark Interface within the remote Spark Cluster

Run the Spark Interface as a job within the Spark cluster. The Spark Interface needs to connect to the MemSQL Master Aggregator, so make sure the port is open (default: 3306).

$ spark-submit --master spark://<spark_master_ip>:7077 memsql_spark_interface.jar --port 10009 --dataDir /path/to/data --dbHost <memsql_master_host> [--dbPort 3306] [--dbUser <user>] [--dbPassword <password>]
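Because several of these options are optional, it can help to assemble the command line in a script and inspect it before running it. The following is a minimal sketch under that assumption: build_interface_cmd is a hypothetical helper that only prints the command, and it uses no flags other than the ones shown above.

```shell
# Print (do not run) a spark-submit command line for the Spark Interface.
# build_interface_cmd is a hypothetical helper; values containing spaces
# are not quoted, and a password passed in is printed in plain text.
# Usage: build_interface_cmd <spark_master_url> <data_dir> <db_host> [db_port] [db_user] [db_password]
build_interface_cmd() {
  local master_url data_dir db_host db_port db_user db_password cmd
  master_url="$1"
  data_dir="$2"
  db_host="$3"
  db_port="${4:-}"
  db_user="${5:-}"
  db_password="${6:-}"
  if [ -z "$master_url" ] || [ -z "$data_dir" ] || [ -z "$db_host" ]; then
    echo "usage: build_interface_cmd <spark_master_url> <data_dir> <db_host> [db_port] [db_user] [db_password]" >&2
    return 1
  fi
  cmd="spark-submit --master ${master_url} memsql_spark_interface.jar"
  cmd="${cmd} --port 10009 --dataDir ${data_dir} --dbHost ${db_host}"
  # Append optional flags only when a value was supplied.
  if [ -n "$db_port" ]; then cmd="${cmd} --dbPort ${db_port}"; fi
  if [ -n "$db_user" ]; then cmd="${cmd} --dbUser ${db_user}"; fi
  if [ -n "$db_password" ]; then cmd="${cmd} --dbPassword ${db_password}"; fi
  echo "$cmd"
}
```

Printing the command first makes it easy to confirm the master URL and database host before submitting the job to the remote cluster.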

3. Connect to the remote Spark Cluster

From the MemSQL Ops CLI, run:

$ memsql-ops spark-connect -h <host> [-P 10009]

The remote Spark cluster is now connected and you can run any pipeline on it.

Low-level MemSQL Ops Spark Commands

This section details some low-level commands, including managing Spark components, running the Spark Shell, and uninstalling Spark.

Listing, Stopping, and Starting Spark Components

To list the Spark components, run:

$ memsql-ops spark-component-list

ID       Agent ID                           Component Type  Host       Port   Web UI Port  State
SC18c6d  Ae4734dc9d64447a0b3dbf7b63dc2028c  MASTER          10.0.2.68  10001  10002        ONLINE
SC63f92  Ae4734dc9d64447a0b3dbf7b63dc2028c  INTERFACE       10.0.2.68  10009  10010        ONLINE
SC2801c  A103b4da55a4e4a57bed14434421a5f0c  WORKER          10.0.2.25  10001  10002        ONLINE
SC2c087  Ae4734dc9d64447a0b3dbf7b63dc2028c  WORKER          10.0.2.68  10003  10004        ONLINE
SCb3ba5  Aa84aa66a25e74ae387620c0577f64b48  WORKER          10.0.3.39  10001  10002        ONLINE

This shows the component and agent IDs, component type, host, Spark port, web UI port, and running state.
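For monitoring, this listing can be post-processed with standard tools. The sketch below reads the listing on standard input and prints the components whose state is anything other than ONLINE. The helper name components_not_online is hypothetical, and the sketch assumes every data row has exactly seven whitespace-separated fields, as in the sample output above.

```shell
# Read spark-component-list output on stdin and print the ID, type, host,
# and state of every component that is not ONLINE.
# components_not_online is a hypothetical helper, not a MemSQL Ops command.
# Assumes data rows have exactly seven whitespace-separated fields; the
# header row has more fields and is therefore skipped.
components_not_online() {
  awk 'NF == 7 && $7 != "ONLINE" { print $1, $3, $4, $7 }'
}
```

It could be used as memsql-ops spark-component-list | components_not_online; for the sample output above, where every component is ONLINE, it prints nothing.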

To stop a running Spark component, run:

$ memsql-ops spark-component-stop [--disable] [--all]

The option --disable will prevent MemSQL Ops from automatically restarting the component.

To start a stopped Spark component, run:

$ memsql-ops spark-component-start [--all]

The commands can also be chained, for instance to stop the Spark Interface you can run:

$ memsql-ops spark-component-list -t interface -q | xargs memsql-ops spark-component-stop

Launching the Spark Shell

By default, the Spark Interface uses all the available cores in the cluster. To run and use the Spark Shell, you need to allocate some resources to it and then stop or restart the Spark Interface.

You can run the Spark Shell with a limit on the total number of executor cores it uses. You need to restart the Spark Interface, but pipelines keep running while the shell is open. When the shell is terminated, the Spark Interface automatically readjusts to use all the available cores.

Run the Spark Shell limiting the total number of executor cores:

$ memsql-ops spark-shell --allow-interface -- --total-executor-cores <num>

Note that after the -- you can specify any additional Spark Shell options.

Then restart the Spark Interface:

$ memsql-ops spark-component-list -t interface -q | xargs memsql-ops spark-component-stop

$ memsql-ops spark-component-list -t interface -q | xargs memsql-ops spark-component-start

Uninstalling Spark

To uninstall the Spark cluster via the MemSQL Ops CLI, run:

$ memsql-ops spark-uninstall