Please follow this guide to learn how to migrate to SingleStore tools.

This topic describes how to upgrade an existing MemSQL cluster to the latest 6.0 patch version (e.g. 6.0.x -> 6.0.y) using MemSQL Ops.

Plans in the plancache are dependent upon the MemSQL version, so when you upgrade to a new MemSQL version, all previously compiled plans will be invalidated.

This means that any queries run against the upgraded cluster will force a one time plan compilation, which results in slower query times the first time those queries are run. After the plans have been recompiled, they will be stored again in the plancache and query latency will return to nominal values.

Prior to upgrading

Taking a backup is strongly recommended as a standard precautionary measure. See Backing Up and Restoring Data for more information.

And to verify your cluster is ready for upgrade, run the following commands from the master aggregator:

SHOW LEAVES;

SHOW AGGREGATORS;

SHOW CLUSTER STATUS;

EXPLAIN RESTORE REDUNDANCY;

EXPLAIN REBALANCE PARTITIONS;

With the output of these commands, confirm that the following are true:

- All leaves are online

- All aggregators are online

- There are no partitions with ‘Orphan’ role

- No Rebalance or restore redundancy is necessary

Upgrading MemSQL Ops

After you have backed up your data and verified your cluster is ready, upgrade MemSQL Ops using the agent-upgrade command:

$ memsql-ops agent-upgrade

If your cluster does not have internet access, find the latest available MemSQL Ops 6.0 version number at http://versions.memsql.com/memsql-ops/latest-v6 and use the payload URL to download it to your local machine.

Then, run the agent-upgrade command and pass in the path to the binary for Ops:

$ sudo memsql-ops agent-upgrade --file-path /path/to/memsql-ops-XYZ.tar.gz

Now you are ready to perform an offline or online upgrade of MemSQL.

Offline Upgrade of MemSQL

The simplest and preferred upgrade option is an offline cluster upgrade. It is the least error prone and easiest to execute; however, it requires downtime as all of the nodes in the cluster will be upgraded at the same time, shutting down the entire cluster for the duration of the upgrade.

Execute the following command to start an offline upgrade:

$ memsql-ops memsql-upgrade [--version <VERSION>]

If you do not specify a version, you will be upgraded to the latest version of MemSQL. Note: You cannot downgrade from your current version.

If your cluster does not have internet access, download the MemSQL binary from the following locations before running the memsql-upgrade command with the --file-path argument:

- For the latest version, the download link is: http://download.memsql.com/releases/latest/memsqlbin_amd64.tar.gz

- For any other version, the download link format is: http://download.memsql.com/releases/version/X.Y.Z/memsqlbin_amd64.tar.gz

$ sudo memsql-ops memsql-upgrade --file-path /path/to/memsqlbin_amd64.tar.gz

For more information on the memsql-upgrade command, see the CLI reference documentation.

Online Upgrade of MemSQL (High Availability clusters only)

If the cluster is running with High Availability, you also have the option to perform an online, incremental cluster upgrade, which maintains cluster availability throughout the upgrade process.

In this procedure, you will upgrade individual nodes in the cluster while other nodes in the cluster are running to maintain cluster availability throughout the upgrade process. It is very important that you follow the upgrade sequence exactly as described in this section: leaf nodes first, then child aggregator nodes, and finally the master aggregator. Unless you require zero downtime, the offline upgrade process is simpler and less error prone.

You must be running MemSQL Ops 5.7 or later to perform an online upgrade with these instructions. Also, it is strongly recommended to avoid running CREATE DATABASE, DROP DATABASE, or ALTER TABLE during the upgrade procedure.

Part 1: Prepare for Upgrade

-

Log into any node and ensure your cluster is running with High Availability (Redundancy Level 2). The

redundancy_levelvariable should be2.memsql> show variables like 'redundancy_level'; +------------------+-------+ | Variable_name | Value | +------------------+-------+ | redundancy_level | 2 | +------------------+-------+ -

Ensure your cluster is healthy, and in particular ensure that all nodes are online and there is both a primary and secondary for each partition; otherwise, you will be unable to keep the cluster available throughout the upgrade procedure. Run SHOW CLUSTER STATUS to retrieve the current state of your cluster.

-

(Recommended but optional) Prior to upgrading, you may run SNAPSHOT DATABASE on any or all databases, which compacts the transaction logs, reducing database recovery time on startup.

memsql> SNAPSHOT DATABASE database;

- On the master aggregator, disable auto-attach and leaf failure detection:

memsql> SET GLOBAL auto_attach = OFF;

memsql> SET GLOBAL leaf_failure_detection = OFF;

- On the master aggregator, disable aggregator failure detection:

$ memsql-ops memsql-list -q -r aggregator master | xargs -L 1 memsql-ops memsql-update-config --key aggregator_failure_detection --value off --set-global

Part 2: Upgrade Leaf Nodes

The simplest way to upgrade the leaf nodes is to upgrade all leaves in Availability Group 1, then all leaves in Availability Group 2. The simple instructions for this method are below, and more complex instructions for alternative options are discussed later in the section Appendix: Additional instructions for Part 2: Upgrade Leaf Nodes.

Upgrade all leaves in Availability Group 1 by following these steps for each leaf node. You can upgrade them all at the same time, which is fastest, but you can also upgrade one or a few at a time if desired.

-

Run SHOW LEAVES to list the host IP and port values for your leaves.

-

Copy the IP address for a leaf in Availability Group 1, and if a port other than the default port (i.e. 3306) is used, copy the port number as well.

-

Run DETACH LEAF from the Master Aggregator to detach the leaf from the cluster using the IP address and optional port value from the previous step.

memsql> DETACH LEAF 'host'[:port];

- List out all of the nodes running MemSQL with

memsql-list. Then, select the detached leaf and copy its ID from the output.

$ memsql-ops memsql-list

- Stop MemSQL on the leaf node.

$ memsql-ops memsql-stop MEMSQL_ID

- Upgrade the leaf using the

memsql-upgradecommand. This process will upgrade the node and restart it automatically. Specifying a version number is optional.

$ memsql-ops memsql-upgrade --skip-snapshot --memsql-id MEMSQL_ID --no-prompt [--version VERSION]

Wait for the MemSQL node to start and recover. You can check whether it is recovering by running ``SHOW DATABASES EXTENDED;`` and seeing if any row has State = `'recovering'`.

- Re-attach the leaf back to the cluster by running

ATTACH LEAF ... NO REBALANCEfrom the Master Aggregator.

memsql> ATTACH LEAF 'host'[:port] NO REBALANCE;

Wait for the attach to complete and the leaf to move to the online status. This may take some time. All leaf nodes must be attached and online before proceeding with the upgrade procedure, i.e. the next `RESTORE REDUNDANCY` or `REBALANCE PARTITIONS` command.

-

Perform steps one through seven for the rest of the leaf nodes in Availability Group 1.

-

Run

RESTORE REDUNDANCYon every database.

memsql> RESTORE REDUNDANCY ON database;

-

Perform the same steps for all of the leaf nodes in Availability Group 2.

-

Run

RESTORE REDUNDANCYon every database after all leaves are re-attached.

memsql> RESTORE REDUNDANCY ON database;

- After you have finished upgrading all the nodes in Availability Groups 1 and 2, run

REBALANCE PARTITIONSon every database.

memsql> REBALANCE PARTITIONS ON database;

For an example on upgrading leaves, see the section Appendix: Additional instructions for Part 2: Upgrade Leaf Nodes.

Part 3: Upgrade Child Aggregator Nodes

- List out all of the nodes running MemSQL with

memsql-list. Then, select the child aggregator you want to upgrade and copy its ID from the output.

$ memsql-ops memsql-list

- Stop MemSQL on the child aggregator.

$ memsql-ops memsql-stop MEMSQL_ID

- Upgrade the node using the

memsql-upgradecommand. This process will upgrade it and restart it automatically. Specifying a version number is optional.

$ memsql-ops memsql-upgrade --skip-snapshot --memsql-id MEMSQL_ID --no-prompt [--version VERSION]

Wait for the MemSQL node to start and recover. You can check whether it is recovering by running SHOW DATABASES EXTENDED; and seeing if any row has State = 'recovering'.

You may upgrade child aggregators in parallel as desired. Keep enough aggregators online at any given time to continue running client queries smoothly.

When upgrading a child aggregator, you will be unable to run queries against that node. Furthermore, it will not see any operations take effect until it is upgraded, so to minimize risks, avoid running DDL operations such as ALTER TABLE while upgrading any of your aggregators.

Part 4: Upgrade the Master Aggregator Node

- List out all of the nodes running MemSQL with

memsql-list. Then, select the master aggregator and copy its ID from the output.

$ memsql-ops memsql-list

- Stop MemSQL on the master aggregator.

$ memsql-ops memsql-stop MEMSQL_ID

- Upgrade the node using the

memsql-upgradecommand. This process will upgrade it and restart it automatically. Specifying a version number is optional.

$ memsql-ops memsql-upgrade --skip-snapshot --memsql-id MEMSQL_ID --no-prompt [--version VERSION]

Wait for the MemSQL node to start and recover. You can check whether it is recovering by running SHOW DATABASES EXTENDED; and seeing if any row has State = 'recovering'.

When upgrading the master aggregator, you will be unable to run queries against that node. This means you will be unable to run DDL operations, DML operations against reference tables, as well as database-level and cluster-level operations.

Part 5: Re-enable Auto Cluster Operations

Restore the auto-cluster operations settings to their initial state.

- On the master aggregator, re-enable auto-attach and leaf failure detection:

memsql> SET GLOBAL auto_attach = ON;

memsql> SET GLOBAL leaf_failure_detection = ON;

- On the master aggregator, re-enable aggregator failure detection by resetting it to the default value

ON:

$ memsql-ops memsql-list -q -r aggregator master | xargs -L 1 memsql-ops memsql-update-config --key aggregator_failure_detection --value on --set-global

Appendix: Additional instructions for Part 2: Upgrade Leaf Nodes

Example

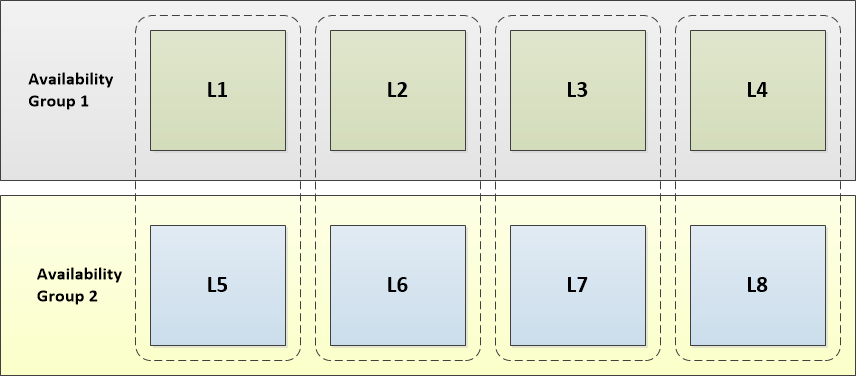

For example: assume we have the following cluster configuration, with 8 leaf nodes in redundancy 2:

There are two availability groups:

- Leaves L1 - L4 are in Availability Group 1

- Leaves L5 - L8 are in Availability Group 2

There are four pairs of leaf nodes:

- L1 and L5

- L2 and L6

- L3 and L7

- L4 and L8

To upgrade these leaf nodes:

- Upgrade Availability Group 1: leaf nodes L1, L2, L3, and L4

- Detach leaf nodes L1, L2, L3, and L4

- Upgrade and start leaf nodes L1, L2, L3, and L4 according to the instructions in steps four through six of the Part 2: Upgrade Leaf Nodes section

- Attach leaf nodes L1, L2, L3, and L4

- Run

RESTORE REDUNDANCYon every database - Upgrade Availability Group 2: leaf nodes L5, L6, L7, and L8

- Detach leaf nodes L5, L6, L7, and L8

- Upgrade and start leaf nodes L5, L6, L7, and L8 according to the instructions in steps four through six of the Part 2: Upgrade Leaf Nodes section

- Attach leaf nodes L5, L6, L7, and L8

- Run

RESTORE REDUNDANCYon every database - Run

REBALANCE PARTITIONSon every database

Upgrading alternate groups of leaves in parallel

To keep the cluster online, at least one leaf in each leaf pair must be online at any given time, so you must upgrade the two nodes in a leaf pair at different times. But upgrading nodes in different leaf pairs may be done in parallel.

The simplest and generally fastest procedure to achieve this is to upgrade all the leaves in one availability group, then the other availability group, as described above. But you can also upgrade leaf nodes in a different order - you do not have to upgrade all nodes in one availability group before the other - as long as you ensure at least one leaf node in each pair is online at all times.

You may split all leaf nodes into multiple groups of leaf nodes, and upgrade group 1, then group 2, and so on, as long as the two leaves in each leaf pair are in different groups. Run RESTORE REDUNDANCY on every database in after each group of upgrades, and run REBALANCE PARTITIONS on every database at the end.